Problem

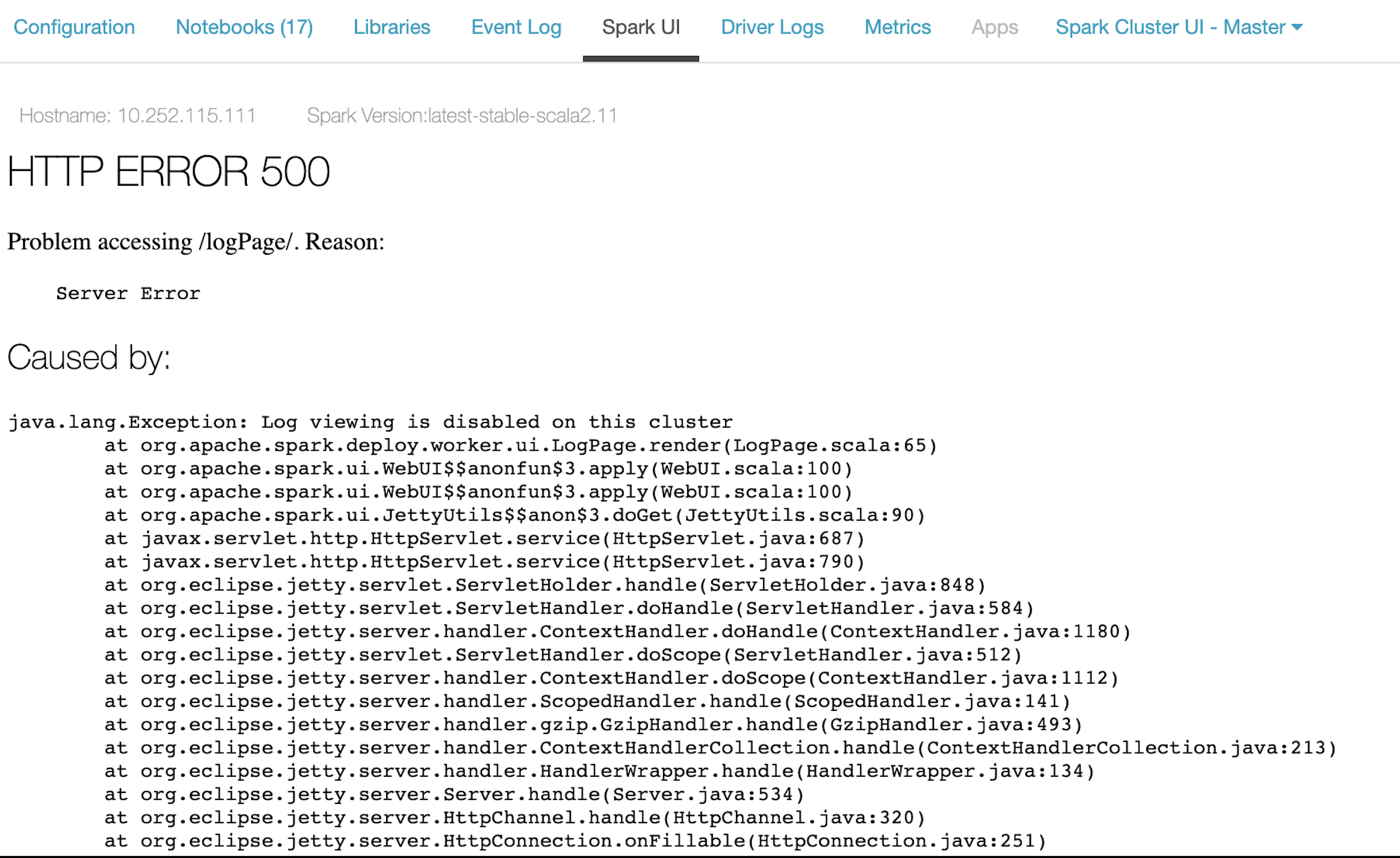

Users of clusters in Shared access mode, including admins, intermittently get HTTP 500 errors when they try to view task logs in the Apache Spark UI.

Error

    Caused by: java.lang.Exception: Log viewing is disabled on this cluster
        at org.apache.spark.deploy.worker.ui.LogPage.render(LogPage.scala:65)
        at org.apache.spark.ui.WebUI$$anonfun$3.apply(WebUI.scala:100)
        at org.apache.spark.ui.WebUI$$anonfun$3.apply(WebUI.scala:100)
        at org.apache.spark.ui.JettyUtils$$anon$3.doGet(JettyUtils.scala:90)
        at javax.servlet.http.HttpServlet.service(HttpServlet.java:687)
        at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)
        at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:848)
        at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:584)
        at org.eclipse.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1180)
        at org.eclipse.jetty.servlet.ServletHandler.doScope(ServletHandler.java:512)
        at org.eclipse.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1112)
        at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)
        at org.eclipse.jetty.server.handler.gzip.GzipHandler.handle(GzipHandler.java:493)
        at org.eclipse.jetty.server.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:213)
        at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:134)
        at org.eclipse.jetty.server.Server.handle(Server.java:534)
        at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:320)
        at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:251)

Cause

This exception is controlled by the spark.databricks.ui.logViewingEnabled Spark property. When the property is set to false, log viewing is disabled on the cluster, and the Spark UI returns this error whenever you try to view the logs.

The spark.databricks.ui.logViewingEnabled property defaults to true. However, other Spark configurations (such as spark.databricks.acl.dfAclsEnabled) can sometimes change its value to false.

Solution

Set spark.databricks.ui.logViewingEnabled to true in the cluster's Spark config.

spark.databricks.ui.logViewingEnabled true

Setting the property explicitly restores its default value in case another configuration has overwritten it.
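If you manage many clusters, you may want to check whether a cluster's Spark config text already pins this property. The following is a minimal sketch of a hypothetical helper, not a Databricks tool or API. It assumes the config is the plain "key value" text shown above, one pair per line, and that a repeated key takes the last value (an assumption). It only inspects the text you pass in, so it cannot detect a value that another setting such as spark.databricks.acl.dfAclsEnabled changes on the running cluster. That limitation is exactly why the fix pins the value explicitly.

```python
from typing import Dict

# Property named in this article.
LOG_VIEWING_KEY = "spark.databricks.ui.logViewingEnabled"


def parse_spark_conf(text: str) -> Dict[str, str]:
    """Hypothetical helper: parse 'key value' lines into a dict.

    Assumes one pair per line; if a key repeats, the last value wins (assumption).
    """
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank lines
        # Split on the first run of whitespace: the key, then the rest as the value.
        parts = line.split(None, 1)
        key = parts[0]
        value = parts[1].strip() if len(parts) > 1 else ""
        conf[key] = value
    return conf


def log_viewing_pinned(conf: Dict[str, str]) -> bool:
    """Return True only if the config text explicitly sets log viewing to true.

    An absent key returns False: the runtime default is true, but other
    settings may override it, so only an explicit value counts as pinned.
    """
    return conf.get(LOG_VIEWING_KEY, "").lower() == "true"


if __name__ == "__main__":
    cluster_conf = parse_spark_conf("""
spark.databricks.acl.dfAclsEnabled true
spark.databricks.ui.logViewingEnabled true
""")
    print(log_viewing_pinned(cluster_conf))
```

Treating a missing key as "not pinned" is a deliberate choice: the property's default is true, but as described above, another configuration can flip it, so the helper only reports success when the fix line is actually present.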