Problem

You are trying to create a dataset using a schema that contains Scala enumeration fields (classes and objects). When you run your code in a notebook cell, you get a ClassNotFoundException error.

Sample code

%scala

object TestEnum extends Enumeration {

type TestEnum = Value

val E1, E2, E3 = Value

}

import spark.implicits._

import TestEnum._

case class TestClass(i: Int, e: TestEnum) {}

val ds = Seq(TestClass(1, TestEnum.E1)).toDS

Error message

ClassNotFoundException: lineb3e041f628634740961b78d5621550d929.$read$$iw$$iw$$iw$$iw$$iw$$iw$TestEnum

Cause

The ClassNotFoundException error occurred because the sample code does not define the class and object in a package cell.
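The class name in the error message (`line…$read$$iw$…$TestEnum`) suggests that code defined directly in a notebook cell is compiled into generated wrapper classes rather than under a stable, fully qualified name. A minimal sketch for inspecting the runtime name of an object follows. `runtimeName` is a hypothetical helper written for this illustration, not part of any Spark or Databricks API, and the exact name printed depends on where the code runs.

```scala
// Same enumeration as in the sample code above.
object TestEnum extends Enumeration {
  type TestEnum = Value
  val E1, E2, E3 = Value
}

// Hypothetical helper (illustration only): returns the JVM class name
// that the runtime has to be able to load for this object.
def runtimeName(x: AnyRef): String = x.getClass.getName

// In a notebook cell the printed name is expected to carry a generated
// wrapper prefix similar to the one in the error message; inside a
// package it would be the fully qualified package name instead.
println(runtimeName(TestEnum))
```

If the printed name starts with a generated prefix instead of a package name, the definition is a likely candidate for moving into a package cell.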

Solution

To use custom Scala classes and objects defined within notebooks reliably in Apache Spark and across notebook sessions, you must define the class and object inside a package and import the package into your notebook.

Define the class and object

This sample code starts off by creating the package com.databricks.example. It then defines the object TestEnum and assigns values, before defining the class TestClass.

%scala

package com.databricks.example // Create a package.

object TestEnum extends Enumeration { // Define an object called TestEnum.

type TestEnum = Value

val E1, E2, E3 = Value // Enum values

}

case class TestClass(i: Int, other: TestEnum.Value) // Define a class called TestClass.

Import the package

After the class and object have been defined, you can import the package you created into a notebook and use both the class and the object.

This sample code starts by importing the com.databricks.example package that we just defined.

It then creates and displays a Dataset using the TestClass class and the TestEnum object. Both are defined in the com.databricks.example package.

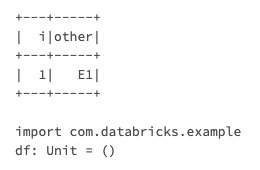

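%scala

import com.databricks.example // Import the package defined in the package cell.

val df = sc.parallelize(Array(example.TestClass(1, example.TestEnum.E1))).toDS() // Build a Dataset of TestClass.

df.show() // Display the Dataset.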

The Dataset displays successfully after the sample code is run.
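The imported definitions can also be checked without a cluster. The following is a minimal sketch under one stated assumption: a plain object named `example` stands in for the `com.databricks.example` package so that everything fits in a single script. In a notebook, these definitions would live in the package cell instead.

```scala
// Stand-in for the package cell (assumption for this sketch only):
// an object is used so the definitions and their use fit in one script.
object example {
  object TestEnum extends Enumeration {
    type TestEnum = Value
    val E1, E2, E3 = Value
  }
  case class TestClass(i: Int, other: TestEnum.Value)
}

// Import the two names directly instead of qualifying them with `example.`.
import example.{TestClass, TestEnum}

val row = TestClass(1, TestEnum.E1)

// Look an enumeration value up by name and compare it with the field.
val sameByName = TestEnum.withName("E1") == row.other

// Enumeration values carry integer ids assigned in declaration order.
val ids = TestEnum.values.toList.map(_.id)

println(s"$row, matches E1 by name: $sameByName, ids: $ids")
```

Importing the individual names keeps later code shorter than the `example.TestClass` form used in the sample. Either style refers to the same definitions.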

Please review the package cells (AWS | Azure | GCP) documentation for more information.