Problem

You are trying to cast a value of one or greater as a DECIMAL using equal values for both precision and scale. A null value is returned instead of the expected value.

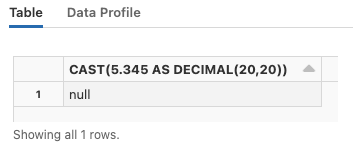

This sample code:

%sql SELECT CAST (5.345 AS DECIMAL(20,20))

Returns null.

Cause

The DECIMAL type (AWS | Azure | GCP) is declared as DECIMAL(precision, scale), where precision and scale are optional.

Precision represents the total number of digits in the value of the variable. This includes the whole number part and the fractional part.

The scale represents the number of fractional digits in the value of the variable. Put simply, this is the number of digits to the right of the decimal point.

For example, the number 123.45 has a precision of five (as there are five total digits) and a scale of two (as only two digits are on the right-hand side of the decimal point).
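The counting rule above can be checked outside of Spark. The following is a minimal sketch using Python's standard `decimal` module. The helper name `precision_and_scale` is hypothetical and not part of any Spark or Databricks API, and it assumes a finite decimal literal passed as a string.

```python
from decimal import Decimal


def precision_and_scale(literal: str) -> tuple[int, int]:
    """Return (precision, scale) for a finite decimal literal such as "123.45"."""
    _sign, digits, exponent = Decimal(literal).as_tuple()
    # Scale: number of digits to the right of the decimal point.
    scale = max(-exponent, 0)
    # Whole-number digits: stored digits minus those that fall after the point.
    integer_digits = max(len(digits) + exponent, 0)
    # Precision: total digits, whole-number part plus fractional part.
    return integer_digits + scale, scale


print(precision_and_scale("123.45"))  # (5, 2)
print(precision_and_scale("5.345"))   # (4, 3)
```

The second call matches the precision and scale that Spark reports for 5.345 in the error message shown in the Solution section.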

When the precision and scale are equal, the type can only hold values whose absolute value is less than one, because all digits are used for the fractional part of the number. For example, DECIMAL(20, 20) defines a value with 20 total digits, all 20 of which are to the right of the decimal point. No digits are left for the whole number part.

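If the value needs more whole-number digits than the declared type allows (precision minus scale), it overflows the type, and null is returned instead of the decimal value. In the sample code, 5.345 needs one whole-number digit, but DECIMAL(20,20) allows none.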
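The overflow check can be illustrated with a small emulation. This is a sketch only, not Spark's implementation: the function name `cast_to_decimal` is hypothetical, and it assumes half-up rounding to the target scale and a finite input literal.

```python
from decimal import Decimal, ROUND_HALF_UP, localcontext
from typing import Optional


def cast_to_decimal(literal: str, precision: int, scale: int) -> Optional[Decimal]:
    """Emulate a cast to DECIMAL(precision, scale) that yields None on overflow."""
    with localcontext() as ctx:
        ctx.prec = 100  # roomy working precision so quantize itself does not fail
        quantum = Decimal((0, (1,), -scale))  # 10 ** -scale, e.g. 0.001 for scale 3
        rounded = Decimal(literal).quantize(quantum, rounding=ROUND_HALF_UP)
    # Digits left of the decimal point after rounding to the target scale.
    integer_digits = len(rounded.as_tuple().digits) - scale
    # The type leaves (precision - scale) digits for the whole-number part.
    if integer_digits > precision - scale:
        return None  # overflow: stands in for the null returned by the cast
    return rounded


print(cast_to_decimal("5.345", 20, 20))  # None
print(cast_to_decimal("5.345", 4, 3))    # 5.345
```

A value below one, such as 0.345, fits DECIMAL(20, 20) in this sketch because it needs no whole-number digits.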

Solution

Set spark.sql.ansi.enabled to true in your cluster's Spark config (AWS | Azure | GCP).

This enables Spark SQL ANSI compliance.

For more information, review the ANSI compliance documentation.

Once ANSI compliance is enabled, casting a value to a DECIMAL type that cannot hold it returns an error instead of null. The error message includes the precision and scale of the value you tried to cast.

For example, this sample code:

%sql SELECT CAST (5.345 AS DECIMAL(20,20))

Returns this error message:

Error in SQL statement: SparkArithmeticException: [CANNOT_CHANGE_DECIMAL_PRECISION] Decimal(expanded, 5.345, 4, 3) cannot be represented as Decimal(20, 20).

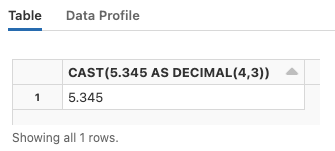

However, this sample code:

%sql SELECT CAST (5.345 AS DECIMAL(4,3))

Returns the expected result, 5.345.
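If the goal is to keep a specific number of fractional digits, the precision must also leave room for the whole-number part. The sketch below suggests a type declaration for a given value and scale. The helper `decimal_type_for` is hypothetical, the limit of 38 reflects the maximum precision of Spark's DECIMAL type, and the sketch assumes the requested scale is at least the value's own scale, so no rounding carry needs to be considered.

```python
from decimal import Decimal

MAX_PRECISION = 38  # upper bound for DECIMAL precision in Spark SQL


def decimal_type_for(literal: str, scale: int) -> str:
    """Suggest a DECIMAL(p,s) declaration that fits the value at the given scale."""
    _sign, digits, exponent = Decimal(literal).as_tuple()
    # Digits needed to the left of the decimal point.
    integer_digits = max(len(digits) + exponent, 0)
    # Precision must cover whole-number digits plus the fractional digits.
    precision = max(integer_digits + scale, 1)
    if precision > MAX_PRECISION:
        raise ValueError(f"precision {precision} exceeds the maximum of {MAX_PRECISION}")
    return f"DECIMAL({precision},{scale})"


print(decimal_type_for("5.345", 3))   # DECIMAL(4,3)
print(decimal_type_for("5.345", 20))  # DECIMAL(21,20)
```

Under these assumptions, a value such as 5.345 with 20 fractional digits calls for DECIMAL(21,20) rather than DECIMAL(20,20).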