Problem

You are trying to install Cartopy on a cluster and you receive a ManagedLibraryInstallFailed error message.

    java.lang.RuntimeException: ManagedLibraryInstallFailed: org.apache.spark.SparkException: Process List(/databricks/python/bin/pip, install, cartopy==0.17.0, --disable-pip-version-check) exited with code 1.
    ERROR: Command errored out with exit status 1:
    command: /databricks/python3/bin/python3.7 /databricks/python3/lib/python3.7/site-packages/pip/_vendor/pep517/_in_process.py get_requires_for_build_wheel /tmp/tmpjoliwaky
    cwd: /tmp/pip-install-t324easa/cartopy
    Complete output (3 lines):
    setup.py:171: UserWarning: Unable to determine GEOS version. Ensure you have 3.3.3 or later installed, or installation may fail.
    '.'.join(str(v) for v in GEOS_MIN_VERSION), ))
    Proj 4.9.0 must be installed.
    ----------------------------------------
    ERROR: Command errored out with exit status 1: /databricks/python3/bin/python3.7 /databricks/python3/lib/python3.7/site-packages/pip/_vendor/pep517/_in_process.py get_requires_for_build_wheel /tmp/tmpjoliwaky Check the logs for full command output.
    for library:PythonPyPiPkgId(cartopy,Some(0.17.0),None,List()),isSharedLibrary=false

Cause

Cartopy depends on two system libraries: libgeos (version 3.3.3 or later) and libproj (version 4.9.0). If either library is missing from the cluster, pip cannot build Cartopy and the installation fails.
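To confirm the cause before changing the cluster, you can check from a notebook cell whether the native libraries are visible to the system loader. The following is a minimal sketch, not part of the original article: the helper name `find_native_libs` is hypothetical, and the library names `geos_c` and `proj` are assumptions about how the shared libraries are named on the cluster image. A result of `None` suggests the library is missing. A path only shows that the runtime library is present, not that the development headers needed to build Cartopy are installed.

```python
from ctypes.util import find_library


def find_native_libs(names=("geos_c", "proj")):
    """Map each library name to what the system loader finds, or None.

    The default names are assumptions about the GEOS and PROJ shared
    library names; adjust them if your image uses different names.
    """
    return {name: find_library(name) for name in names}


# Print one line per library so missing ones are easy to spot.
for name, location in find_native_libs().items():
    print(f"{name}: {location if location else 'not found'}")
```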

Solution

Configure a cluster-scoped init script (AWS | Azure | GCP) to automatically install Cartopy and the required dependencies.

- Create the base directory to store the init script in, if it does not already exist. This article uses dbfs:/databricks/<directory> as an example.

    %python
    dbutils.fs.mkdirs("dbfs:/databricks/<directory>/")

- Create the script and save it to a file.

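    %python
    # The shebang must be the first line of the script, so it starts right after the opening quotes.
    # The final argument (True) overwrites the file if it already exists.
    dbutils.fs.put("dbfs:/databricks/<directory>/cartopy.sh", """#!/bin/bash
    sudo apt-get install libgeos++-dev -y
    sudo apt-get install libproj-dev -y
    /databricks/python/bin/pip install Cartopy
    """, True)

- Check that the script exists.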

    %python
    display(dbutils.fs.ls("dbfs:/databricks/<directory>/cartopy.sh"))

- On the cluster configuration page, click the Advanced Options toggle.

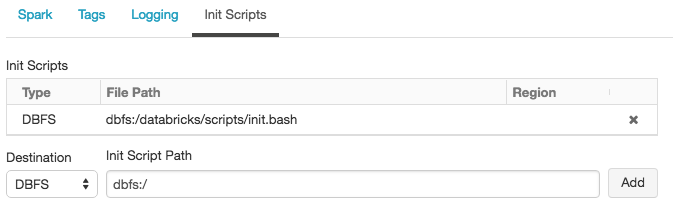

- At the bottom of the page, click the Init Scripts tab.

- In the Destination drop-down, select DBFS, provide the file path to the script, and click Add.

- Restart the cluster.
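After the cluster restarts, you may want to confirm that the init script did its job. The following is a minimal sketch, not part of the original procedure: the helper name `check_import` is hypothetical, and it only checks that the module can be imported in the notebook's Python environment.

```python
import importlib


def check_import(module_name="cartopy"):
    """Try to import a module and report the outcome.

    Returns (True, version) on success, where version falls back to
    "unknown" if the module has no __version__ attribute, or
    (False, error message) if the import fails.
    """
    try:
        module = importlib.import_module(module_name)
    except ImportError as exc:
        return False, str(exc)
    return True, getattr(module, "__version__", "unknown")


ok, detail = check_import("cartopy")
print("Cartopy import succeeded, version:" if ok else "Cartopy import failed:", detail)
```

If the import fails, the init script logs for the cluster are the likely place to look for the apt-get or pip output.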