Problem

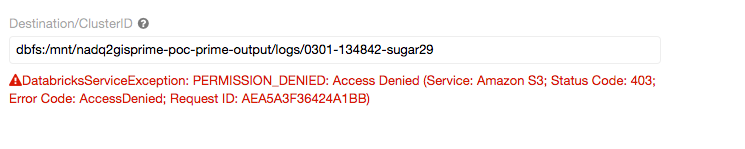

When you try to write log files to an S3 bucket, you get the error:

com.amazonaws.services.s3.model.AmazonS3Exception: Access Denied (Service: Amazon S3; Status Code: 403; Error Code: AccessDenied; Request ID: 2F8D8A07CD8817EA), S3 Extended Request ID:

Cause

The DBFS mount points to an S3 bucket that is accessed through an assumed role and uses SSE-KMS encryption. The assumed role has full S3 access to the location where you are trying to save the log file, and it can also access the KMS key.

However, access is denied because the logging daemon runs outside the container on the host machine. Only the container has access to the Apache Spark configuration that assumes the role, so the daemon writes the logs without the assumed role's permissions.

Solution

Use the cluster IAM role to deliver the logs. Configure log delivery to an S3 bucket in the AWS account that hosts the Databricks data plane VPC (your customer Databricks account).

Review the Cluster Log Delivery documentation for more information.
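
As a rough illustration of the solution, the sketch below builds the part of a cluster specification that points log delivery at an S3 bucket and attaches an instance profile (the cluster IAM role). The field names (`cluster_log_conf`, `s3.destination`, `s3.region`, `aws_attributes.instance_profile_arn`) follow the Clusters API as best recalled here and should be checked against the Cluster Log Delivery documentation. The helper name, bucket name, region, and ARN are hypothetical placeholders, not values from this article.

```python
import json


def build_log_delivery_fragment(bucket, prefix, region, instance_profile_arn):
    """Return a cluster-spec fragment that delivers logs via the cluster IAM role.

    Hypothetical helper: it only assembles a dictionary and does not call
    any Databricks API.
    """
    return {
        # The instance profile is the cluster IAM role used to write the logs.
        "aws_attributes": {
            "instance_profile_arn": instance_profile_arn,
        },
        # Log destination: an S3 bucket in the data plane AWS account.
        "cluster_log_conf": {
            "s3": {
                "destination": f"s3://{bucket}/{prefix}",
                "region": region,
            }
        },
    }


# Placeholder values, assumed for illustration only.
fragment = build_log_delivery_fragment(
    bucket="example-log-bucket",
    prefix="cluster-logs",
    region="us-west-2",
    instance_profile_arn="arn:aws:iam::123456789012:instance-profile/example-profile",
)
print(json.dumps(fragment, indent=2))
```

The fragment would be merged into the rest of the cluster definition (node type, Spark version, and so on). The instance profile must have write access to the destination bucket, because the logging daemon uses it rather than the role assumed inside the container.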