Problem

You have long-running MLflow tasks in your notebook or job, and the tasks do not complete. Instead, they fail with a (403) Invalid access token error message.

Error stack trace:

    MlflowException: API request to endpoint /api/2.0/mlflow/runs/create failed with error code 403 != 200. Response body: '<html>
    <head>
    <meta http-equiv="Content-Type" content="text/html;charset=utf-8"/>
    <title>Error 403 Invalid access token.</title>
    </head>
    <body><h2>HTTP ERROR 403</h2>
    <p>Problem accessing /api/2.0/mlflow/runs/create. Reason:
    <pre> Invalid access token.</pre></p>
    </body>
    </html>

Cause

The Databricks access token that the MLflow Python client uses to communicate with the tracking server expires after several hours. If your ML tasks run for an extended period of time, the access token may expire before the task completes. This results in MLflow calls failing with a (403) Invalid access token error message in both notebooks and jobs.
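To tell this failure apart from other MLflow errors in a long-running task, one option is to inspect the exception text. The following is a minimal sketch, assuming the message contains the `error code 403` text and the `Invalid access token` phrase as in the stack trace above. The helper name `is_expired_token_error` is hypothetical and is not part of MLflow.

```python
import re

# Hypothetical helper, not part of MLflow: matches the 403 status text
# followed (possibly on a later line) by the "Invalid access token" phrase.
_TOKEN_ERROR = re.compile(r"error code 403\b.*Invalid access token", re.DOTALL)


def is_expired_token_error(message: str) -> bool:
    """Return True if the message looks like the 403 invalid-token failure."""
    return bool(_TOKEN_ERROR.search(message))
```

In a notebook, this could be called on `str(exc)` inside an `except` block around an MLflow call, so that token expiry is reported separately from other failures.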

Solution

You can work around this issue by manually creating an access token with an extended lifetime and then configuring that access token in your notebook prior to running MLflow tasks.

- Generate a personal access token (AWS | Azure) and configure it with an extended lifetime.

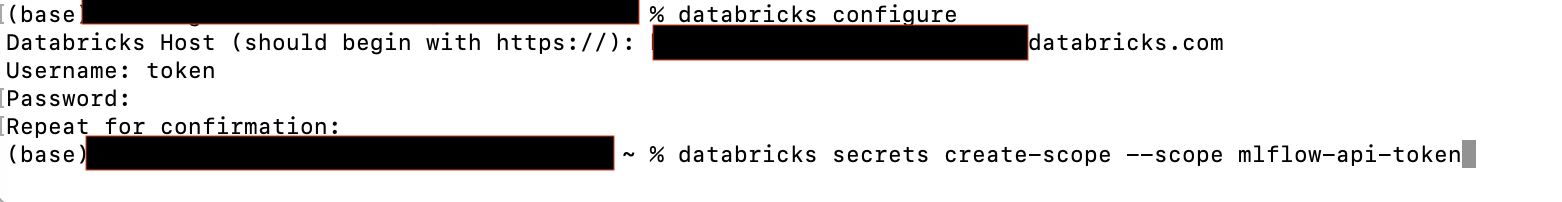

- Set up the Databricks CLI (AWS | Azure).

- Use the Databricks CLI to create a new secret with the personal access token you just created.

    databricks secrets put --scope {<secret-name>} --key mlflow-access-token --string-value {<personal-access-token>}

- Insert this sample code at the beginning of your notebook. Include your secret name and your workspace URL (AWS | Azure).

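    %python
    import os
    from databricks_cli.configure import provider

    # Read the long-lived personal access token from the secret created earlier.
    access_token = dbutils.secrets.get(scope="{<secret-name>}", key="mlflow-access-token")

    # Expose the token and workspace URL through environment variables.
    os.environ["DATABRICKS_TOKEN"] = access_token
    os.environ["DATABRICKS_HOST"] = "https://<workspace-url>"

    # Have the Databricks client read its configuration from those variables.
    config_provider = provider.EnvironmentVariableConfigProvider()
    provider.set_config_provider(config_provider)

- Run your notebook or job as normal.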
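The environment-variable step in the sample code could also be wrapped in a small helper that fails early when the token or workspace URL is missing or malformed. This is a sketch under stated assumptions: the function name `set_databricks_auth_env` and its parameters are hypothetical, and only the `DATABRICKS_TOKEN` and `DATABRICKS_HOST` variable names come from the sample code. It does not call `dbutils` or `databricks_cli`, so it can be tried outside a notebook.

```python
import os
from typing import MutableMapping, Optional


def set_databricks_auth_env(
    token: str,
    host: str,
    environ: Optional[MutableMapping[str, str]] = None,
) -> None:
    """Store the token and workspace URL under the names used in the sample code.

    Hypothetical helper: `environ` defaults to os.environ and can be replaced
    with a plain dict for testing.
    """
    env = os.environ if environ is None else environ
    if not token or not token.strip():
        raise ValueError("access token is empty")
    if not host.startswith("https://"):
        raise ValueError("workspace URL must start with https://")
    env["DATABRICKS_TOKEN"] = token.strip()
    # Drop any trailing slash so the host value has a consistent form.
    env["DATABRICKS_HOST"] = host.rstrip("/")
```

In a notebook, the token argument would be the value returned by `dbutils.secrets.get`, and the config provider would still need to be registered as in the sample code.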