Problem

The cluster returns Cancelled in a Python notebook. Inspect the driver log (stderr) on the cluster configuration page for a stack trace and error message similar to the following:

    log4j:WARN No appenders could be found for logger (com.databricks.conf.trusted.ProjectConf$).
    log4j:WARN Please initialize the log4j system properly.
    log4j:WARN See https://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
    OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=512m; support was removed in 8.0
    Traceback (most recent call last):
      File "/local_disk0/tmp/1551693540856-0/PythonShell.py", line 30, in <module>
        from IPython.nbconvert.filters.ansi import ansi2html
      File "/databricks/python/lib/python3.5/site-packages/IPython/nbconvert/__init__.py", line 6, in <module>
        from . import postprocessors
      File "/databricks/python/lib/python3.5/site-packages/IPython/nbconvert/postprocessors/__init__.py", line 6, in <module>
        from .serve import ServePostProcessor
      File "/databricks/python/lib/python3.5/site-packages/IPython/nbconvert/postprocessors/serve.py", line 29, in <module>
        class ProxyHandler(web.RequestHandler):
      File "/databricks/python/lib/python3.5/site-packages/IPython/nbconvert/postprocessors/serve.py", line 31, in ProxyHandler
        @web.asynchronous
    AttributeError: module 'tornado.web' has no attribute 'asynchronous'

Cause

When you install the bokeh library, tornado version 6.0a1, an alpha release, is installed by default. That release does not provide tornado.web.asynchronous, which the IPython nbconvert code shown in the stack trace still uses, so the import fails. The solution is to revert to the stable version of tornado.
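To confirm that the cluster is affected, a quick check along the following lines can be run in any Python environment that shares the cluster's libraries. This is a minimal sketch, not part of the original procedure: the helper name tornado_status is hypothetical, and the check only looks for the attribute named in the stack trace.

```python
import importlib


def tornado_status():
    """Return (version, has_asynchronous), or None if tornado is not importable.

    Hypothetical helper for illustration only.
    """
    try:
        tornado = importlib.import_module("tornado")
        web = importlib.import_module("tornado.web")
    except ImportError:
        return None
    # The traceback fails on @web.asynchronous, so test for that attribute directly.
    return tornado.version, hasattr(web, "asynchronous")


status = tornado_status()
if status is None:
    print("tornado is not installed in this environment")
else:
    # str.format is used instead of an f-string so the snippet also runs on Python 3.5.
    print("tornado {}: web.asynchronous present = {}".format(*status))
```

If the second value is False, the installed tornado lacks the decorator that the nbconvert code expects, which matches the error above.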

Solution

Follow the steps below to create a cluster-scoped init script (AWS | Azure | GCP). The init script removes the newer version of tornado and installs the stable version.

- If the init script does not already exist, create a base directory to store it:

      %python
      dbutils.fs.mkdirs("dbfs:/databricks/<directory>/")

- Create the following script:

      %python
      dbutils.fs.put("dbfs:/databricks/<directory>/tornado.sh", """#!/bin/bash
      pip uninstall --yes tornado
      rm -rf /home/ubuntu/databricks/python/lib/python3.5/site-packages/tornado*
      rm -rf /databricks/python/lib/python3.5/site-packages/tornado*
      /usr/bin/yes | /home/ubuntu/databricks/python/bin/pip install tornado==5.1.1
      """, True)

- Confirm that the script exists:

      %python
      display(dbutils.fs.ls("dbfs:/databricks/<directory>/tornado.sh"))

- Go to the cluster configuration page (AWS | Azure | GCP) and click the Advanced Options toggle.

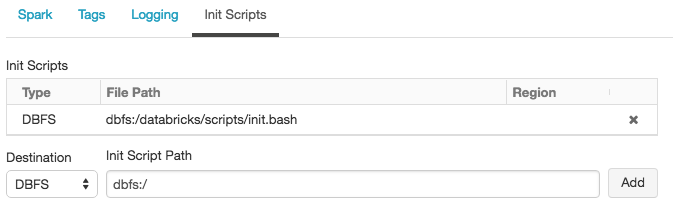

- At the bottom of the page, click the Init Scripts tab.

- In the Destination drop-down, select DBFS, provide the file path to the script, and click Add.

- Restart the cluster.
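After the restart, it can be worth checking that the init script took effect. The sketch below assumes the pinned version is 5.1.1, as in the script above; the names EXPECTED, installed_tornado_version, and matches_pin are hypothetical and exist only for this illustration.

```python
import importlib

EXPECTED = "5.1.1"  # version pinned by the init script above


def installed_tornado_version():
    """Return tornado's version string, or None if tornado is not importable."""
    try:
        return importlib.import_module("tornado").version
    except ImportError:
        return None


def matches_pin(installed, expected=EXPECTED):
    """True only when the installed version string equals the pinned one."""
    return installed == expected


found = installed_tornado_version()
print("installed:", found, "| matches pin:", matches_pin(found))
```

If the versions do not match, the init script may not have run; the cluster's init script logs are a reasonable place to look next.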

For more information, see: