Speculative execution

Speculative execution can be used to automatically re-attempt a task that is not making progress compared to other tasks in the same stage.

This means if one or more tasks are running slower in a stage, they will be re-launched. The task that completes first is marked as successful. The other attempt gets killed.

Implementation

When a job hangs intermittently and one or more tasks are hanging, enabling speculative execution is often the first step to resolving the issue. As a result of speculative execution, the slow hanging task that is not progressing is re-attempted in another node.

It means that if one or more tasks are running slower in a stage, the tasks are relaunched. Upon successful completion of the relaunched task, the original task is marked as failed. If the original task completes before the relaunched task, the original task attempt is marked as successful and the relaunched task is killed.

In addition to the speculative execution, there are a few additional settings that can be tweaked as needed. Speculative execution should only be enabled when necessary.

Below are the major configuration options for speculative execution.

Configuration |

Description |

Databricks Default |

OSS Default |

spark.speculation |

If set to true, performs speculative execution of tasks. This means if one or more tasks are running slowly in a stage, they will be re-launched. | false |

false |

spark.speculation.interval |

How often Spark will check for tasks to speculate. | 100ms |

100ms |

spark.speculation.multiplier |

How many times slower a task is than the median to be considered for speculation. | 3 |

1.5 |

spark.speculation.quantile |

Fraction of tasks which must be complete before speculation is enabled for a particular stage | 0.9 |

0.75 |

How to interpret the Databricks default values

If speculative execution is enabled (spark.speculation), then every 100 ms (spark.speculation.interval), Apache Spark checks for slow running tasks. A task is marked as a slow running task if it is running more than three times longer (spark.speculation.multiplier) the median execution time of completed tasks. Spark waits until 90% (spark.speculation.quantile) of the tasks have been completed before starting speculative execution.

Identifying speculative execution in action

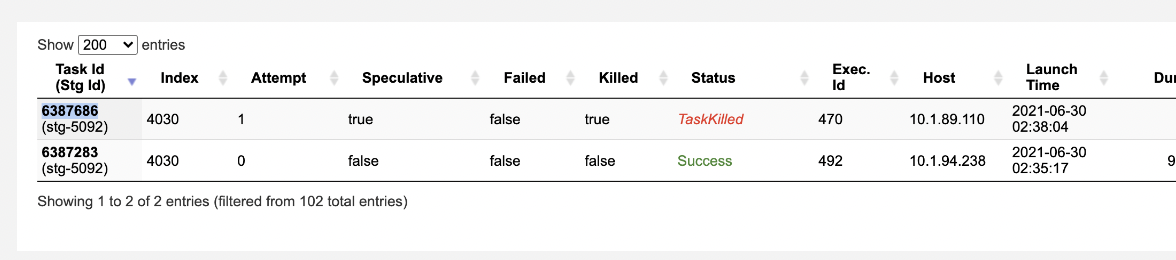

- Review the task attempts in the Spark UI. If speculative execution is running, you see one task with the Status as Success and the other task with a Status of TaskKilled.

- Speculative execution will not always start, even though there are slow tasks. This is because the criteria for speculative execution must be met before it starts running. This typically happens on stages with a small number of tasks, with only one or two tasks getting stuck. If the spark.speculation.quantile is not met, speculative execution does not start.

When to enable speculative execution

- Speculative execution can be used to unblock a Spark application when a few tasks are running for longer than expected and the cause is undetermined. Once a root cause is determined, you should resolve the underlying issue and disable speculative execution.

- Speculative execution ensures that the speculated tasks are not scheduled on the same executor as the original task. This means that issues caused by a bad VM instance are easily mitigated by enabling speculative execution.

When not to run speculative execution

- Speculative execution should not be used for a long time period on production jobs for a long time period. Extended use can result in failed tasks.

- If the operations performed in the task are not idempotent, speculative execution should not be enabled.

- If you have data skew, the speculated task can take as long as the original task, leaving the original task to succeed and the speculated task to get killed. Speculative execution does not guaranteed the speculated task will finish first.

- Enabling speculative execution can impact performance so it should only be used for troubleshooting. If you require speculative execution to complete your workloads, open a Databricks support request. Databricks support can help determine the root cause of the task slowness.