Problem

You perform an inner join, but the resulting joined table is missing data.

For example, assume you have two tables, orders and models.

%python

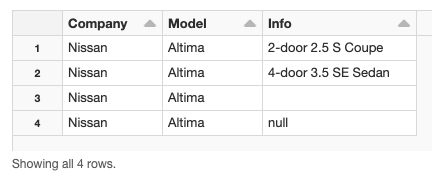

df_orders = spark.createDataFrame([('Nissan','Altima','2-door 2.5 S Coupe'), ('Nissan','Altima','4-door 3.5 SE Sedan'), ('Nissan','Altima',''), ('Nissan','Altima', None)], ["Company", "Model", "Info"])

%python

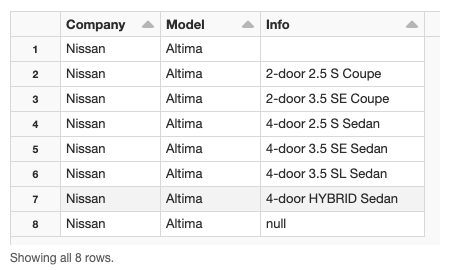

df_models = spark.createDataFrame([('Nissan','Altima',''), ('Nissan','Altima','2-door 2.5 S Coupe'), ('Nissan','Altima','2-door 3.5 SE Coupe'), ('Nissan','Altima','4-door 2.5 S Sedan'), ('Nissan','Altima','4-door 3.5 SE Sedan'), ('Nissan','Altima','4-door 3.5 SL Sedan'), ('Nissan','Altima','4-door HYBRID Sedan'), ('Nissan','Altima',None)], ["Company", "Model", "Info"])

You attempt a straight join of the two tables.

%python

df_orders.createOrReplaceTempView("Orders")

df_models.createOrReplaceTempView("Models")

SQL

Copy to clipboardCopy

SELECT *

MAGIC FROM Orders a

MAGIC INNER JOIN Models b

MAGIC ON a.Company = b.Company

MAGIC AND a.Model = b.Model

MAGIC AND a.Info = b.InfoThe resulting joined table only includes three of the four records from the orders table. The record with a null value in a column does not appear in the results.

Cause

Apache Spark does not consider null values when performing a join operation.

If you attempt to join tables, and some of the columns contain null values, the null records will not be included in the resulting joined table.

Solution

If your source tables contain null values, you should use the Spark null safe operator (<=>).

When you use <=> Spark processes null values (instead of dropping them) when performing a join.

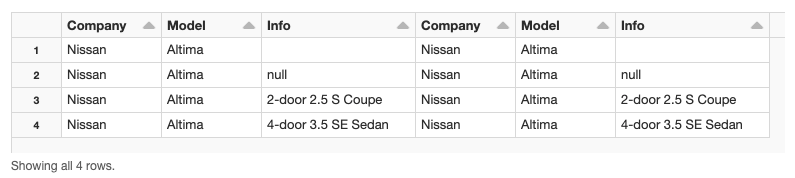

For example, if we modify the sample code with <=>, the resulting table does not drop the null values.

%sql SELECT * MAGIC FROM Orders a MAGIC INNER JOIN Models b MAGIC ON a.Company = b.Company MAGIC AND a.Model = b.Model MAGIC AND a.Info <=> b.Info

Example notebook

Review the Inner join drops null values example notebook.