Problem

You are trying to use Japanese characters in your tables, but keep getting errors.

Create a table with the OPTIONS keyword

OPTIONS provides extra metadata to the table. You try creating a table with OPTIONS and specify the charset as utf8mb4.

%sql

CREATE TABLE default.JPN_COLUMN_NAMES('作成年月' string

,'計上年月' string

,'所属コード' string

,'生保代理店コード_8桁' string

,'所属名' string

)

using csv OPTIONS (path "/mnt/tabledata/testdata/", header "true", delimiter ",", inferSchema "false", ignoreLeadingWhiteSpace "false", ignoreTrailingWhiteSpace "false", multiLine "true", escape "\"" , charset "utf8mb4");The result is an error.

Error in SQL statement: AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:javax.jdo.JDODataStoreException: Put request failed : INSERT INTO TABLE_PARAMS (PARAM_VALUE,TBL_ID,PARAM_KEY) VALUES (?,?,?)

Create a table without the OPTIONS keyword

You try to create a table without using OPTIONS.

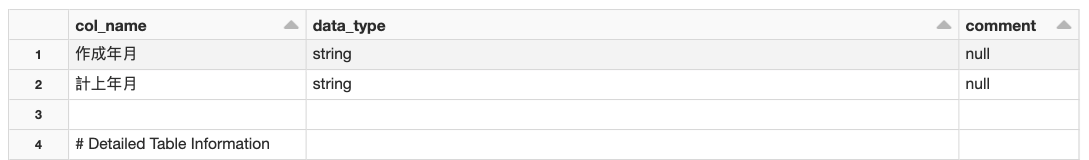

%sql CREATE TABLE test.JPN_COLUMN_NAMES (`作成年月` string ,`計上年月` string) USING csv describe extended test.JPN_COLUMN_NAMES;

The table appears to be created, but the column names are shown as ???? instead of using the specified Japanese characters.

Create a table with Hive table expression

You try creating a Hive format table and specify the charset as utf8mb4.

%sql CREATE TABLE test.JPN_COLUMN_NAMES (`作成年月` string ,`計上年月` string) ROW FORMAT SERDE "org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe" WITH SERDEPROPERTIES ( "separatorChar" = ",", "quoteChar" = "\"", "escapeChar" = "\\", "serialization.encoding"='utf8mb4') TBLPROPERTIES ( 'store.charset'='utf8mb4', 'retrieve.charset'='utf8mb4');

The result is an error.

Caused by: java.sql.SQLException: Incorrect string value: '\xE4\xBD\x9C\xE6\x88\x90...' for column 'COLUMN_NAME' at row 1 Query is: INSERT INTO COLUMNS_V2 (CD_ID,COMMENT,`COLUMN_NAME`,TYPE_NAME,INTEGER_IDX) VALUES (6544,<null>,'作成年月','string',0)

Cause

When a table is created, an entry is updated in the Hive metastore. The Hive metastore is typically a MySQL database.

When a new table is created, the names of the columns are inserted into the TABLE_PARAMS of the metastore.

The charset collation of PARAM_VALUE from TABLE_PARAMS is latin1_bin as collation and the charset is latin1.

%scala

executeQuery("""SELECT TABLE_SCHEMA , TABLE_NAME , COLUMN_NAME , COLLATION_NAME FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_NAME = 'TABLE_PARAMS' """)

Solution

latin1 does not have support for Japanese characters, but UTF-8 does.

You need to use an external metastore with UTF-8_bin as collation and the charset as UTF-8.

Any MySQL database 5.6 or above can be used as a Hive metastore.

For this example, we are using MySQL 8.0.13-4.

- Create an external Apache Hive metastore (AWS | Azure | GCP).

- Create a database to instantiate the new metastore with default tables.

%sql create database <database_name>

- The newly created tables can be explored in the external database objects browser or by using the show tables command.

%sql -- Run in the metastore database. show tables in <database_name>

- Check the collation information in MySQL at the table level.

%sql SELECT TABLE_COLLATION,TABLE_NAME,TABLE_TYPE,TABLE_COLLATION FROM INFORMATION_SCHEMA.TABLES where TABLE_TYPE like 'BASE%'

- Check the collation information in MySQL at the column level.

%sql SELECT TABLE_SCHEMA , TABLE_NAME , COLUMN_NAME , COLLATION_NAME FROM INFORMATION_SCHEMA.COLUMNS

- Change the charset from latin1 to UTF-8.

%sql -- Run in the metastore database. All queries are compatible with MySQL. -- Change collation and charset across the database. ALTER DATABASE <database_name> CHARACTER SET utf8 COLLATE utf8_bin; -- Change collation and charset per table. ALTER TABLE <table_name> CONVERT TO CHARACTER SET utf8 COLLATE utf8_bin; -- Change collation and charset at the column level. ALTER TABLE <table_name> MODIFY <column_name> <datatype> CHARACTER SET utf8 COLLATE utf8_bin;

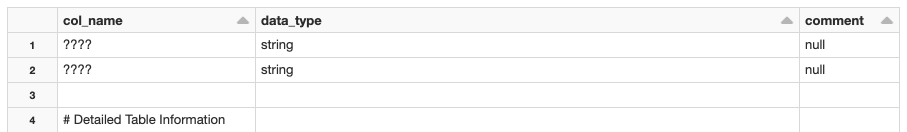

- You can now correctly view Japanese characters when you display the table.