Problem

The cluster returns Cancelled in a Python notebook. Notebooks in all other languages execute successfully on the same cluster.

Cause

If you install a conflicting version of a library, such as ipython, ipywidgets, numpy, scipy, or pandas, into the PYTHONPATH, the Python REPL can break, causing all commands to return Cancelled after 30 seconds. This also breaks %sh, the notebook magic command that lets you run shell scripts in Python notebook cells.
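One way to check for this cause is to look for a library that has more than one installed copy visible on the Python path. The following Python sketch groups every distribution that `importlib.metadata` can find by normalized name and reports names that appear more than once, with each copy's version and location. The helper names `find_conflicts` and `installed_records` are hypothetical, not a Databricks API, and this is only a heuristic: a single copy installed at an incompatible version will not show up.

```python
import re
from collections import defaultdict
from importlib import metadata
from typing import Dict, Iterable, List, Tuple

Record = Tuple[str, str, str]  # (name, version, location)


def _normalize(name: str) -> str:
    # PEP 503-style normalization so "IPython" and "ipython" compare equal.
    return re.sub(r"[-_.]+", "-", name).lower()


def find_conflicts(records: Iterable[Record]) -> Dict[str, List[Tuple[str, str]]]:
    """Group (version, location) by package name; keep names seen more than once."""
    seen: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for name, version, location in records:
        seen[_normalize(name)].append((version, location))
    return {name: entries for name, entries in seen.items() if len(entries) > 1}


def installed_records() -> List[Record]:
    """Collect (name, version, location) for every distribution found on sys.path."""
    records: List[Record] = []
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken metadata
            records.append((name, dist.version, str(dist.locate_file(""))))
    return records


if __name__ == "__main__":
    for name, entries in find_conflicts(installed_records()).items():
        print(name, entries)
```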

Solution

To solve this problem, do the following:

- Identify the conflicting library and uninstall it.

- Install the correct version of the library in a notebook or with a cluster-scoped init script.

Identify the conflicting library

- Uninstall each library one at a time, and check if the Python REPL still breaks.

- If the REPL still breaks, reinstall the library you removed and remove the next one.

- When you find the library that causes the REPL to break, install the correct version of that library using one of the two methods below.
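While you work through the list, it can help to record which versions of the usual suspects Python currently resolves, so you can compare before and after each uninstall. A minimal sketch, assuming the libraries are installed as pip distributions; `suspect_versions` is a hypothetical helper, and `SUSPECTS` just repeats the libraries named in the Cause section. Note that `metadata.version` reports the first matching distribution on `sys.path`, which is usually, but not guaranteed to be, the copy that `import` loads.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional

# Libraries this article names as common sources of conflicts.
SUSPECTS = ("ipython", "ipywidgets", "numpy", "scipy", "pandas")


def suspect_versions(names: Iterable[str] = SUSPECTS) -> Dict[str, Optional[str]]:
    """Map each library name to its installed version, or None if not installed."""
    versions: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


print(suspect_versions())
```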

You can also inspect the cluster's driver log (stderr), available from the Cluster Configuration page, for a stack trace and error message that can help identify the conflicting library.
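If you have the stderr text in hand (for example, copied from the log page), a short Python sketch like the following can pull out the final exception line of each traceback, which usually names the module that failed to import. The helper name `extract_error_lines` is hypothetical, and the sketch assumes standard Python traceback formatting: indented frame lines followed by an unindented `ExceptionType: message` line.

```python
from typing import List

TRACEBACK_HEADER = "Traceback (most recent call last):"


def extract_error_lines(log_text: str) -> List[str]:
    """Return the final 'ExceptionType: message' line of each Python traceback."""
    errors: List[str] = []
    in_traceback = False
    for line in log_text.splitlines():
        # endswith() tolerates a timestamp or logger prefix before the header.
        if line.rstrip().endswith(TRACEBACK_HEADER):
            in_traceback = True
            continue
        # Frame and source lines are indented; the first non-empty,
        # unindented line after the header is the exception line.
        if in_traceback and line and not line[0].isspace():
            errors.append(line.strip())
            in_traceback = False
    return errors
```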

Install the correct library

Do one of the following.

Option 1: Install in a notebook using pip3

%sh
sudo apt-get -y install python3-pip
pip3 install <library-name>

Option 2: Install using a cluster-scoped init script

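Follow the steps below to create a cluster-scoped init script that installs the correct version of the library. In the examples, replace <directory> with the DBFS directory that stores your init scripts and <library-name> with the name of the library to install. To install a specific version, use <library-name>==<version> in the pip3 install line.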

- If the init script does not already exist, create a base directory to store it:

dbutils.fs.mkdirs("dbfs:/databricks/<directory>/")

- Create the following script:

dbutils.fs.put("dbfs:/databricks/<directory>/<library-name>.sh", """#!/bin/bash
sudo apt-get -y install python3-pip
sudo pip3 install <library-name>
""", True)

- Confirm that the script exists:

display(dbutils.fs.ls("dbfs:/databricks/<directory>/<library-name>.sh"))

- Go to the cluster configuration page and click the Advanced Options toggle.

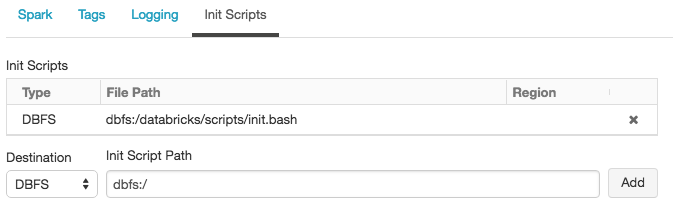

- At the bottom of the page, click the Init Scripts tab.

- In the Destination drop-down, select DBFS, provide the file path to the script, and click Add.

- Restart the cluster.
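If you need specific versions, you might generate the script text rather than typing it by hand, which also guarantees that the `#!/bin/bash` shebang sits on the first line of the file, where it must be. A minimal sketch; `build_init_script` and its `pins` mapping are hypothetical names, and the commands it emits follow the init script in the steps above with an added `==<version>` pin.

```python
import shlex
from typing import Dict


def build_init_script(pins: Dict[str, str]) -> str:
    """Return bash init-script text that installs each library at a pinned version."""
    lines = [
        "#!/bin/bash",  # the shebang must be the very first line of the file
        "sudo apt-get -y install python3-pip",
    ]
    # Sort for a stable, reviewable install order.
    for name, version in sorted(pins.items()):
        lines.append("sudo pip3 install " + shlex.quote(f"{name}=={version}"))
    return "\n".join(lines) + "\n"
```

You could then pass the returned string as the contents argument of `dbutils.fs.put` when creating the script file.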