This article explains how to connect to an Apache Spark driver node over SSH for advanced troubleshooting and for installing custom software.

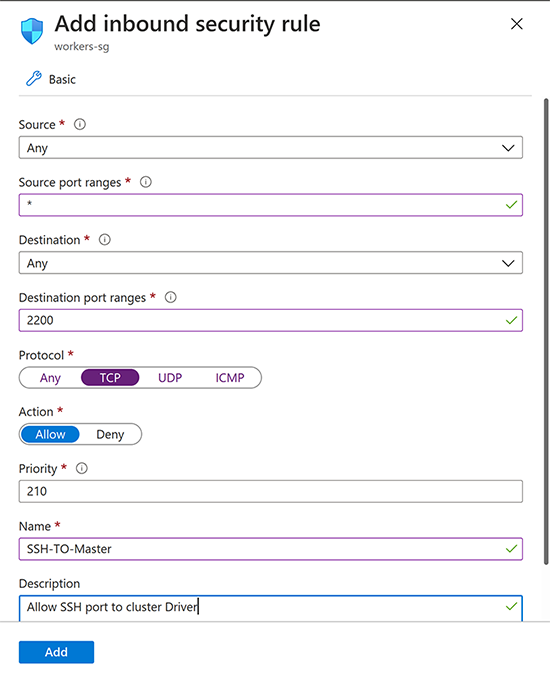

Configure an Azure network security group

The network security group associated with your VNet must allow inbound SSH traffic. The default SSH port for the cluster is 2200 (not the standard SSH port 22). If you are using a custom port, make a note of it before proceeding. You also need to identify a traffic source: either a single IP address or an IP range that covers your entire office.

- In the Azure portal, find the network security group. You can find its name on the public subnet.

- Edit the inbound security rules to allow connections to the SSH port. In this example, we are using the default port.
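The same inbound rule can also be created from the command line. The following is a minimal sketch, assuming the Azure CLI (az) is installed and logged in. The resource group, network security group name, rule name, priority, and source range are hypothetical placeholders, not values from this article.

```shell
# Hypothetical placeholders: replace with the values for your own deployment.
RESOURCE_GROUP="my-resource-group"
NSG_NAME="my-cluster-nsg"
SOURCE_RANGE="203.0.113.0/24"   # documentation-only range standing in for an office network
SSH_PORT=2200                   # default SSH port used in this article

create_ssh_rule() {
  # The priority must be unique within the network security group (100-4096);
  # 200 is an arbitrary example value.
  az network nsg rule create \
    --resource-group "$RESOURCE_GROUP" \
    --nsg-name "$NSG_NAME" \
    --name allow-ssh-to-driver \
    --priority 200 \
    --direction Inbound \
    --access Allow \
    --protocol Tcp \
    --source-address-prefixes "$SOURCE_RANGE" \
    --destination-port-ranges "$SSH_PORT"
}
```

Call create_ssh_rule once the placeholders are filled in. Restricting the source range to your own addresses keeps the SSH port closed to the rest of the internet.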

Generate an SSH key pair

- Open a local terminal.

- Create an SSH key pair by running this command. The -C option takes a comment that labels the key, such as your email address:

ssh-keygen -t rsa -b 4096 -C "<comment>"

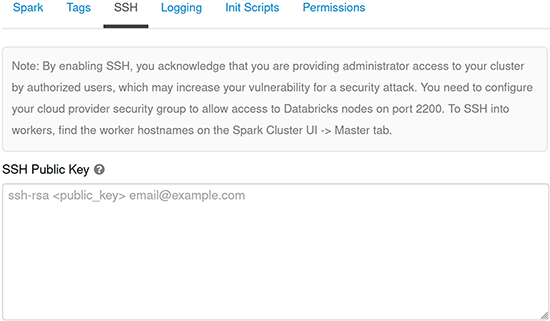
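If you prefer to keep this key pair separate from your other keys, you can name the output file explicitly. This is a sketch under assumptions: the key path and comment below are hypothetical, and any path you choose here is the one to pass as the private key file when you connect later.

```shell
# Hypothetical key location and comment; change both to suit your setup.
KEY_FILE="$HOME/.ssh/spark_driver_rsa"
KEY_COMMENT="spark-driver-access"

generate_key_pair() {
  # Make sure the target directory exists before writing the key.
  mkdir -p "$(dirname "$KEY_FILE")"
  # -f names the private key file; the public key is written to "$KEY_FILE.pub".
  # ssh-keygen prompts for an optional passphrase.
  ssh-keygen -t rsa -b 4096 -C "$KEY_COMMENT" -f "$KEY_FILE"
}

show_public_key() {
  # Print the public key so it can be copied into the cluster configuration.
  cat "$KEY_FILE.pub"
}
```

Run generate_key_pair once, then show_public_key whenever you need the text of the public key.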

Configure a new cluster with your public key

- Copy the ENTIRE contents of the public key file.

- Open the cluster configuration page.

- Click Advanced Options.

- Click the SSH tab.

- Paste the ENTIRE contents of the public key into the Public key field.

- Continue with cluster configuration as normal.
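A truncated or line-wrapped paste is an easy mistake to make when copying the key. As a rough local check before pasting, the sketch below tests that a public key file holds exactly one line with a key type and a key body. The function name and default path are hypothetical, and the check covers only the shape of the line, not whether the key itself is valid.

```shell
# Hypothetical default path; point this at your own public key file.
PUB_KEY_FILE="${PUB_KEY_FILE:-$HOME/.ssh/id_rsa.pub}"

# Succeeds when the file is a single line of the form "<type> <base64> [comment]".
is_complete_public_key() {
  awk '
    NR == 1 && NF >= 2 && $1 ~ /^(ssh-rsa|ssh-ed25519|ecdsa-sha2-)/ { ok = 1 }
    END { exit !(ok && NR == 1) }
  ' "$1" 2>/dev/null
}

if is_complete_public_key "$PUB_KEY_FILE"; then
  cat "$PUB_KEY_FILE"
else
  echo "Public key file is missing or not a single complete line: $PUB_KEY_FILE" >&2
fi
```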

Configure an existing cluster with your public key

If you have an existing cluster and did not provide the public key during cluster creation, you can inject the public key from a notebook.

- Open any notebook that is attached to the cluster.

- Copy the following code into the notebook, updating it with your public key as noted:

    %scala

    val publicKey = "<put your public key here>"

    def addAuthorizedPublicKey(key: String): Unit = {
      val fw = new java.io.FileWriter("/home/ubuntu/.ssh/authorized_keys", /* append */ true)
      fw.write("\n" + key)
      fw.close()
    }

    addAuthorizedPublicKey(publicKey)

- Run the code block to inject the public key.
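To confirm that the key was appended, you can look for it in the authorized_keys file on the driver. The sketch below assumes you have a shell on the driver, for example through a notebook shell cell if your environment provides one (an assumption, not something this article confirms). The function name is hypothetical; the file path is the one used in the code above.

```shell
AUTHORIZED_KEYS="/home/ubuntu/.ssh/authorized_keys"

# Succeeds when the exact key line is present in the authorized_keys file.
# An optional second argument overrides the file to search.
key_is_authorized() {
  # -F: fixed string, -x: match the whole line, -q: no output
  grep -Fxq -- "$1" "${2:-$AUTHORIZED_KEYS}" 2>/dev/null
}

if key_is_authorized "<put your public key here>"; then
  echo "Key found in $AUTHORIZED_KEYS"
else
  echo "Key not found in $AUTHORIZED_KEYS" >&2
fi
```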

SSH into the Spark driver

- Open the cluster configuration page.

- Click Advanced Options.

- Click the SSH tab.

- Note the Driver Hostname.

- Open a local terminal.

- Run the following command, replacing the hostname and private key file path:

ssh ubuntu@<hostname> -p 2200 -i <private-key-file-path>
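If you connect often, the command can be wrapped in a small helper. This is a minimal sketch: the function name is hypothetical, and the ConnectTimeout option is an addition that makes the attempt fail quickly when the network security group is blocking the port, rather than hanging.

```shell
SSH_PORT=2200   # change this if your cluster uses a custom SSH port

# Usage: ssh_to_driver <hostname> <private-key-file-path>
ssh_to_driver() {
  driver_host="$1"
  private_key="$2"
  # ssh ignores private keys that other users can read, so tighten permissions first.
  chmod 600 "$private_key"
  ssh -i "$private_key" -p "$SSH_PORT" -o ConnectTimeout=10 "ubuntu@$driver_host"
}
```

A connection that times out usually points to the inbound security rule or the source address range, while a "Permission denied (publickey)" error usually points to the key configured on the cluster.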