Problem

No Spark jobs start, and the driver logs contain the following error:

Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

Cause

This error can occur when the executor memory and the number of executor cores are set explicitly on the Spark Config tab, and the requested values exceed what a worker node can provide.

Here is a sample config:

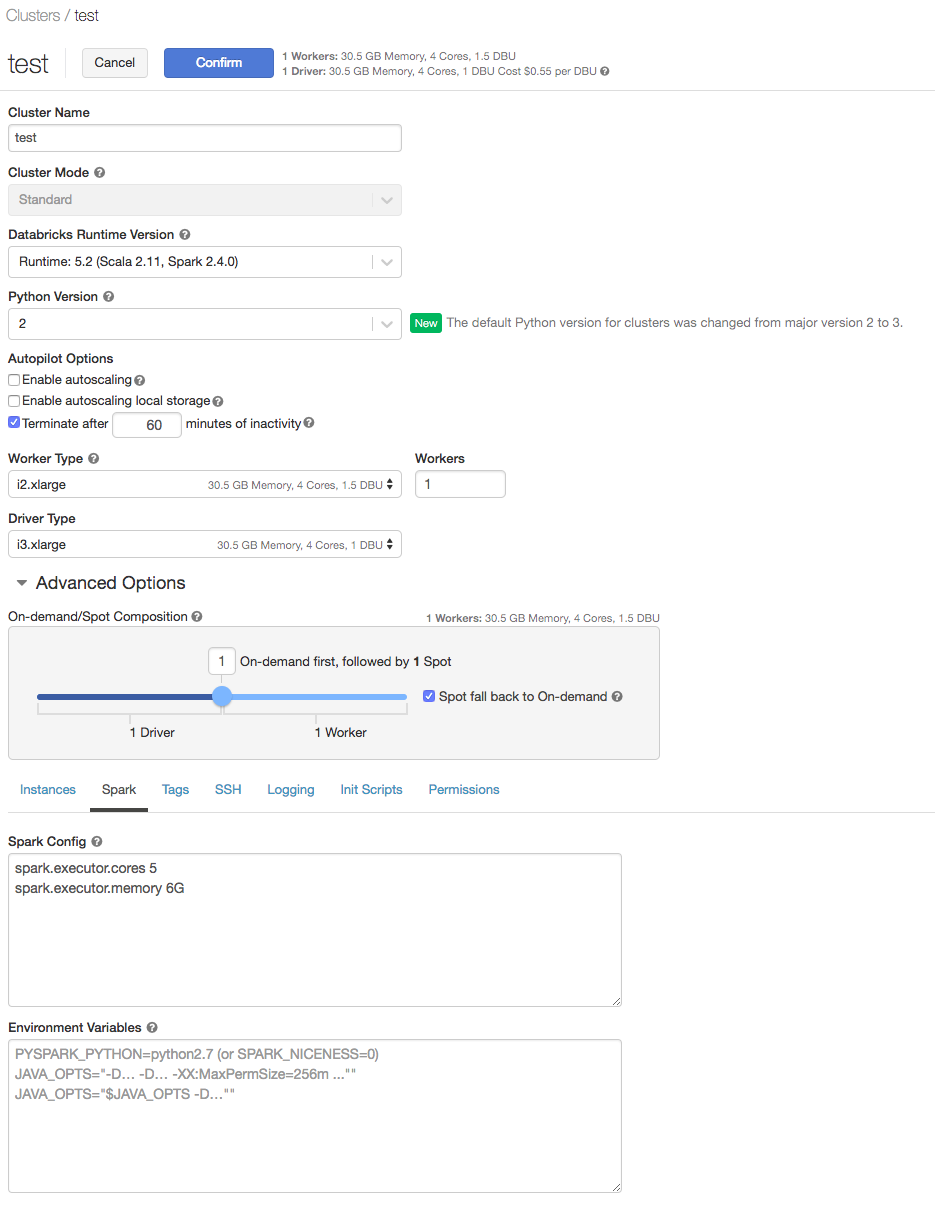

AWS

In this example, the executor is set to an i3.xlarge node, and the Spark Config is set to:

spark.executor.cores 5
spark.executor.memory 6G

The i3.xlarge instance type has only 4 cores, but the user has explicitly set 5 cores per executor. No worker can satisfy that request, so Spark does not start any tasks and repeatedly writes the following warning to the driver logs:

WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources
WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources
WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

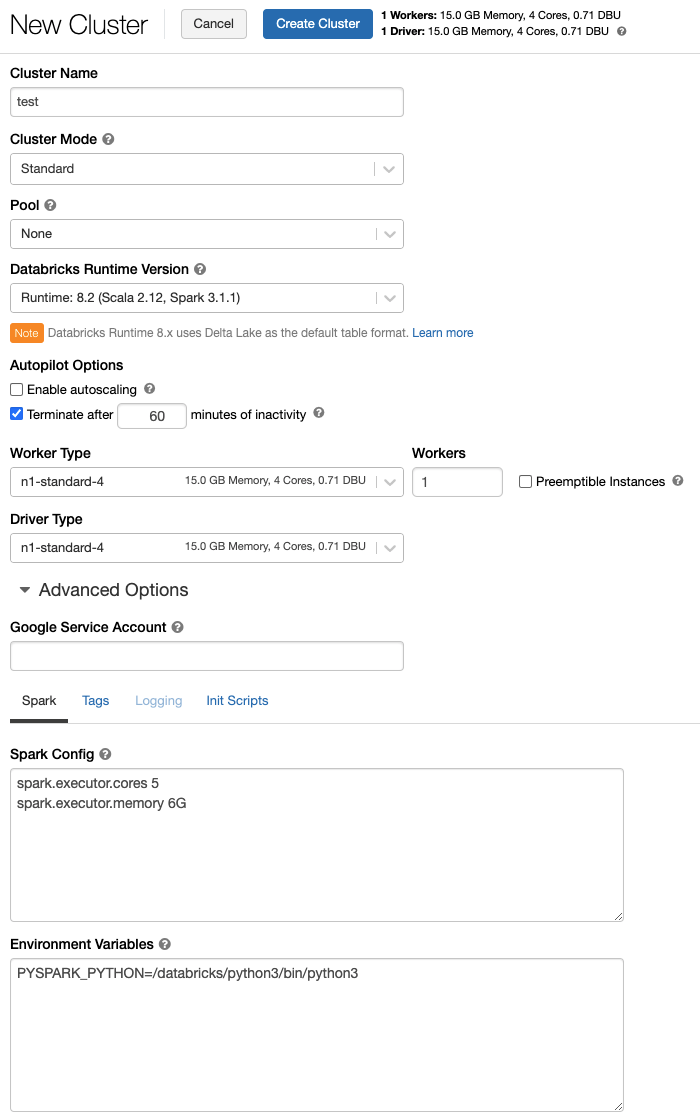

GCP

In this example, the executor is set to an n1-standard-4 node, and the Spark Config is set to:

spark.executor.cores 5
spark.executor.memory 6G

The n1-standard-4 machine type has only 4 cores, but the user has explicitly set 5 cores per executor. No worker can satisfy that request, so Spark does not start any tasks and repeatedly writes the following warning to the driver logs:

WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources
WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources
WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

Solution

Never set spark.executor.cores to a value greater than the number of cores available on the node type you chose for the cluster. In the examples above, that means setting spark.executor.cores to 4 or less.
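The check can be sketched in Python as a pre-flight validation you might run before applying a Spark Config. This is only an illustration: NODE_CORES, parse_spark_config, and check_executor_cores are hypothetical names, not part of Spark or Databricks, and the core counts are the ones quoted in the examples in this article.

```python
# Hypothetical lookup of cores per node type (values from the examples in this article).
NODE_CORES = {"i3.xlarge": 4, "n1-standard-4": 4}


def parse_spark_config(text):
    """Parse 'key value' lines, as entered in the Spark Config box, into a dict."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        key, _, value = line.partition(" ")
        conf[key] = value.strip()
    return conf


def check_executor_cores(node_type, spark_config_text):
    """Return None if the setting fits the node, else a message describing the problem."""
    conf = parse_spark_config(spark_config_text)
    requested = conf.get("spark.executor.cores")
    if requested is None:
        return None  # Not set explicitly, so there is nothing to check.
    available = NODE_CORES[node_type.lower()]
    if int(requested) > available:
        return (
            f"spark.executor.cores={requested} exceeds the "
            f"{available} cores on {node_type}"
        )
    return None


# The AWS example from this article: 5 cores requested on a 4-core node.
print(check_executor_cores("i3.xlarge", "spark.executor.cores 5\nspark.executor.memory 6G"))
```

A return value of None means the requested executor cores fit on the node; any other result describes the mismatch that would lead to the warning shown in the driver logs.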