On December 1, 2023, Databricks will disable cluster-named init scripts in all workspaces. This type of init script was deprecated previously and cannot be used after that date. Cluster-scoped init scripts replaced cluster-named init scripts in August 2018, and cluster-scoped init scripts stored as workspace files continue to be supported.

Databricks recommends that you migrate your cluster-named init scripts to cluster-scoped init scripts stored as workspace files as soon as possible.

You can manually migrate cluster-named init scripts to cluster-scoped init scripts (AWS | Azure) by removing them from the reserved DBFS path at /databricks/init/<cluster-name> and storing them as workspace files (AWS | Azure). Once the scripts are stored as workspace files, you can configure them as cluster-scoped init scripts. After migrating the init scripts, you should disable legacy cluster-named init scripts for the workspace, as described in Disable legacy cluster-named init scripts for the workspace (AWS | Azure).

Alternatively, Databricks Engineering has created a notebook to help automate the migration process.

This notebook does the following:

- Migrates cluster-named init scripts in the workspace to cluster-scoped init scripts stored as workspace files.

- Disables cluster-named init scripts in the workspace.

- Migrates cluster-scoped init scripts stored on DBFS in the workspace to cluster-scoped init scripts stored as workspace files.

Instructions

Prerequisites

You must run this migration notebook on a cluster using Databricks Runtime 11.3 LTS or above.
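If you want to confirm the runtime requirement programmatically, the following is a minimal sketch. It assumes the cluster reports its version as a string that starts with `<major>.<minor>` (for example `11.3.x-scala2.12`); the function name `runtime_is_supported` is hypothetical and is not part of the migration notebook.

```python
import re

MIN_RUNTIME = (11, 3)  # minimum Databricks Runtime stated in the prerequisites


def runtime_is_supported(spark_version: str) -> bool:
    """Return True if a version string such as '11.3.x-scala2.12' is 11.3 or above.

    Assumption: the string begins with '<major>.<minor>'.
    """
    match = re.match(r"(\d+)\.(\d+)", spark_version)
    if match is None:
        raise ValueError(f"Unrecognized runtime version: {spark_version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    # Tuple comparison checks the major version first, then the minor version.
    return (major, minor) >= MIN_RUNTIME
```

The version string itself is an input here; how you obtain it (cluster UI, API, or environment) depends on your setup.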

You should use a bare cluster (no attached init scripts) to run this migration notebook, as the migration process may force a restart of all modified clusters.

Before running the migration notebook, you need the name of the secret scope and the name of the secret that store your personal access token (PAT).

For more information, please review the Create a Databricks-backed secret scope (AWS | Azure | GCP) and the Create a secret in a Databricks-backed scope (AWS | Azure | GCP) documentation.
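To illustrate why the scope name and secret name are needed, here is a minimal sketch of how a token read from a secret scope is typically turned into REST API credentials. The helper `build_auth_headers` and the scope and key names in the comment are hypothetical; this is not the migration notebook's own code.

```python
def build_auth_headers(token: str) -> dict:
    """Build HTTP headers that authenticate a REST call with a personal access token."""
    if not token:
        raise ValueError("Personal access token is empty")
    return {"Authorization": f"Bearer {token}"}


# On a Databricks cluster, the token would usually be read from the secret scope.
# The scope and key names below are placeholders for your own values:
#   token = dbutils.secrets.get(scope="my-scope", key="my-pat")
#   headers = build_auth_headers(token)
```

Reading the token from a secret scope keeps it out of the notebook source and out of the notebook output.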

Do a dry run

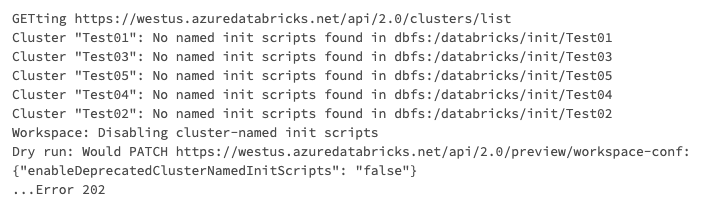

Executing a dry run allows you to test the migration notebook in your workspace without making any changes.

- Download the Migrate cluster-named and cluster-scoped init scripts notebook.

- Import the notebook to your workspace.

- Attach the notebook to a cluster.

- Run the notebook.

- A UI screen appears after you run the notebook, along with a warning that the last command failed. This is normal.

- Ensure Dry Run is set to True and New Location is set to Workspace Files.

- Enter the Scope Name and Secret Name into the appropriate fields.

- Run the notebook.

- The results of the dry run appear in the output at the bottom of the notebook.

Migrate your init scripts

- Run the Migrate cluster-named and cluster-scoped init scripts notebook.

- A UI screen appears after you run the notebook, along with a warning that the last command failed. This is normal.

- In the New Location drop-down menu, select Workspace Files.

- Enter the Scope Name and Secret Name into the appropriate fields.

- Start the migration by selecting False in the Dry Run drop-down menu.

- The notebook automatically re-runs when the value in Dry Run is changed.

Once the notebook finishes running, all of your cluster-named init scripts and all of your cluster-scoped init scripts stored on DBFS are migrated to cluster-scoped init scripts stored as workspace files.

Validate the migrated init scripts

The migrated init scripts are moved to workspace:/init-scripts/<cluster-name>/<original-script-name>.
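When validating, it can help to compute the expected location of each script and compare it with what you see in the workspace. The sketch below follows the `/init-scripts/<cluster-name>/<original-script-name>` layout described above; the function name `expected_workspace_path` and the example cluster and script names are hypothetical.

```python
from posixpath import basename

TARGET_ROOT = "/init-scripts"  # workspace folder named in this article


def expected_workspace_path(cluster_name: str, original_path: str) -> str:
    """Return the workspace path where a migrated init script is expected to be.

    original_path is the script's previous location, for example a DBFS path.
    Only the file name is kept; the rest of the original path is discarded.
    """
    script_name = basename(original_path.rstrip("/"))
    if not script_name:
        raise ValueError(f"No script name found in {original_path!r}")
    return f"{TARGET_ROOT}/{cluster_name}/{script_name}"
```

For example, `expected_workspace_path("etl-cluster", "dbfs:/databricks/init/etl-cluster/install.sh")` returns `/init-scripts/etl-cluster/install.sh`.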

Cluster-named init scripts

Cluster-named init scripts are configured as cluster-scoped init scripts in the corresponding cluster configuration.

Cluster-named init scripts are disabled across the workspace. They should no longer be used.

Cluster-scoped init scripts

Cluster-scoped init scripts on DBFS are now stored as workspace files. The corresponding cluster configurations are automatically updated.
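The configuration update can be pictured as a rewrite of the `init_scripts` list in each cluster specification. The following is a minimal sketch, assuming the Clusters API representation in which each entry is keyed by storage type (`{"dbfs": {"destination": ...}}` or `{"workspace": {"destination": ...}}`). The function `rewrite_init_scripts` is hypothetical and does not reproduce the notebook's actual logic.

```python
import copy
from posixpath import basename


def rewrite_init_scripts(cluster_spec: dict, target_dir: str) -> dict:
    """Return a copy of cluster_spec whose DBFS init scripts point at workspace files.

    Assumption: entries look like {"dbfs": {"destination": "dbfs:/path/script.sh"}}.
    Entries that use any other storage type are left unchanged.
    """
    updated = copy.deepcopy(cluster_spec)  # never mutate the caller's spec
    if "init_scripts" not in updated:
        return updated
    rewritten = []
    for entry in updated["init_scripts"]:
        if "dbfs" in entry:
            name = basename(entry["dbfs"]["destination"])
            rewritten.append({"workspace": {"destination": f"{target_dir}/{name}"}})
        else:
            rewritten.append(entry)
    updated["init_scripts"] = rewritten
    return updated
```

Returning a modified copy rather than editing in place makes it easy to compare the old and new specifications before anything is submitted to a cluster.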

Permissions

Workspace files are governed by access control lists (ACLs). After the migration, the migrated cluster-scoped init scripts are owned by the admin who ran the migration notebook.

If you want other users to be able to run or edit the migrated cluster-scoped init scripts, you must set the permissions on those scripts accordingly.
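If you prefer to grant access through the REST API rather than the UI, the sketch below builds a request body in the shape used by the Databricks Permissions API (`access_control_list` entries with `user_name` and `permission_level`). The body shape and the permission level names are assumptions to verify against the current API reference; the function `build_acl_payload` and the example user names are hypothetical.

```python
# Assumed permission levels for workspace objects; confirm against the API reference.
ALLOWED_LEVELS = {"CAN_READ", "CAN_RUN", "CAN_EDIT", "CAN_MANAGE"}


def build_acl_payload(grants: dict) -> dict:
    """Build a permissions request body from a {user_name: permission_level} mapping."""
    entries = []
    for user_name, level in sorted(grants.items()):  # sorted for stable output
        if level not in ALLOWED_LEVELS:
            raise ValueError(f"Unknown permission level {level!r} for {user_name}")
        entries.append({"user_name": user_name, "permission_level": level})
    return {"access_control_list": entries}
```

For example, `build_acl_payload({"dev@example.com": "CAN_RUN"})` produces a body that grants a single user run access. Sending the request is left out here because the endpoint and object ID depend on your workspace.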