On Dec 1, 2023, Databricks will discontinue support for cluster-scoped init scripts stored as Databricks File System (DBFS) files. Unity Catalog customers should migrate cluster-scoped init scripts stored as DBFS files into Unity Catalog volumes as soon as possible.

Databricks Engineering has created a notebook to help automate the migration process. It migrates cluster-scoped init scripts stored on DBFS to cluster-scoped init scripts stored as Unity Catalog volume files.

Instructions

Prerequisites

- You must own at least one Unity Catalog volume or have the CREATE_VOLUME privilege on at least one Unity Catalog schema to migrate DBFS files into Unity Catalog volumes.

- You must be the metastore admin or have the MANAGE_ALLOWLIST permission on the metastore if any init script is installed on Unity Catalog shared mode clusters, because those scripts must be in the metastore artifact allowlist (AWS | Azure). Note that this only applies to private preview customers who have cluster-scoped init scripts stored in DBFS on shared mode clusters.

- You must run this migration notebook on a cluster using Databricks Runtime 13.3 LTS or above in order to copy files from DBFS into Unity Catalog volumes.

- You should use a bare cluster (no attached init scripts) to run this migration notebook, as the migration process may force a restart of all modified clusters.

- Before running the migration notebook, you need to have the scope name and secret name for your Personal Access Token. For more information, please review the Create a Databricks-backed secret scope (AWS | Azure) and the Create a secret in a Databricks-backed scope (AWS | Azure) documentation.

Do a dry run

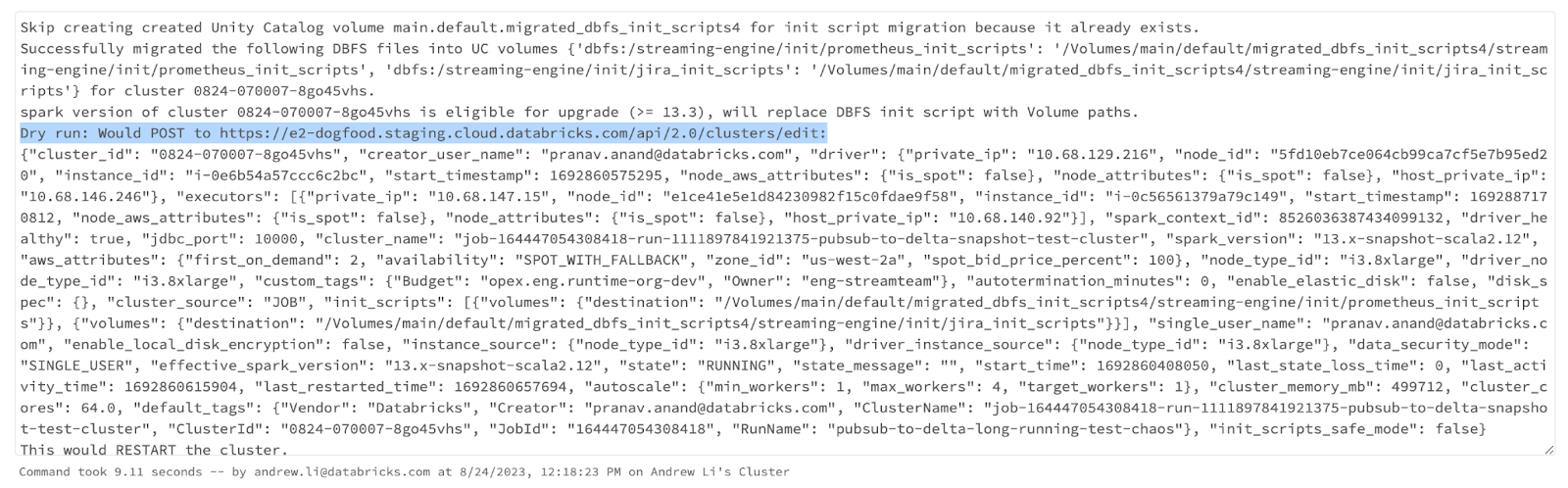

Executing a dry run allows you to test the migration notebook in your workspace. Dry run mode copies all cluster-scoped init scripts stored on DBFS into the UC volume you specify, but does not replace any init scripts on clusters and jobs with the UC volume init scripts.

- Download the Migrate cluster-scoped init scripts from DBFS to Unity Catalog volumes notebook.

- Import the notebook to your workspace.

- Attach the notebook to a cluster running Databricks Runtime 13.3 LTS or above.

- Run the notebook.

- A UI screen appears after you run the notebook, along with a warning that the last command failed. This is normal.

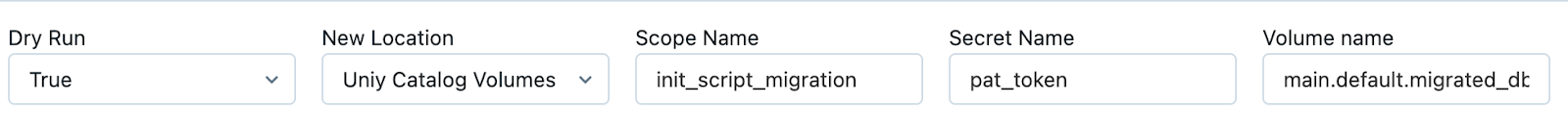

- Ensure Dry Run is set to True.

- Enter the Scope Name and Secret Name into the appropriate fields.

- Enter the name of the volume into which you want to migrate the DBFS init scripts. If the volume does not exist, the script attempts to create it.

- Run the notebook.

- The results of the dry run appear in the output at the bottom of the notebook. The output lists the edits the notebook would attempt to make to clusters and jobs, and the UC volume init scripts it would use as replacements.
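As a rough illustration of what a dry run computes, the sketch below plans the replacement init script list for one cluster without modifying anything. It is not the migration notebook's code. The function names and the path layout inside the volume are hypothetical, and the cluster dictionary is assumed to follow the shape of a Clusters API cluster spec, where each init script is an object such as `{"dbfs": {"destination": "dbfs:/..."}}`.

```python
from copy import deepcopy


def to_volume_path(dbfs_destination, volume_root):
    """Map a DBFS init script path to a path under a UC volume.

    The layout (keeping the DBFS path below the volume root) is an
    assumption for illustration, not necessarily what the notebook does.
    """
    relative = dbfs_destination
    if relative.startswith("dbfs:"):
        relative = relative[len("dbfs:"):]
    return volume_root.rstrip("/") + "/" + relative.lstrip("/")


def plan_init_script_changes(cluster_spec, volume_root):
    """Return the init_scripts list the cluster would have after migration.

    The input spec is never modified, which mirrors dry run behavior:
    the plan is reported, but no cluster or job is edited.
    """
    planned = []
    for script in cluster_spec.get("init_scripts", []):
        if "dbfs" in script:
            new_path = to_volume_path(script["dbfs"]["destination"], volume_root)
            planned.append({"volumes": {"destination": new_path}})
        else:
            # Workspace, volume, and cloud storage scripts are left as they are.
            planned.append(deepcopy(script))
    return planned
```

Calling `plan_init_script_changes(spec, "/Volumes/<catalog>/<schema>/<volume>")` on a cluster spec gives a list you can compare against the dry run output for that cluster.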

Migrate your init scripts

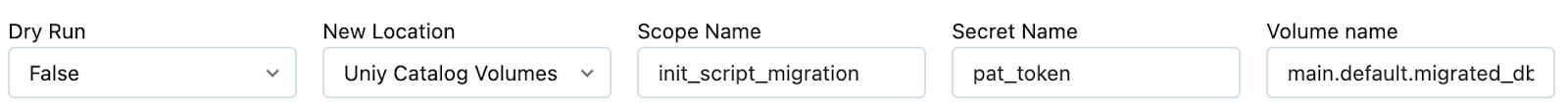

- Start the migration by selecting False in the Dry Run drop-down menu.

- The notebook automatically re-runs when the value in Dry Run is changed.

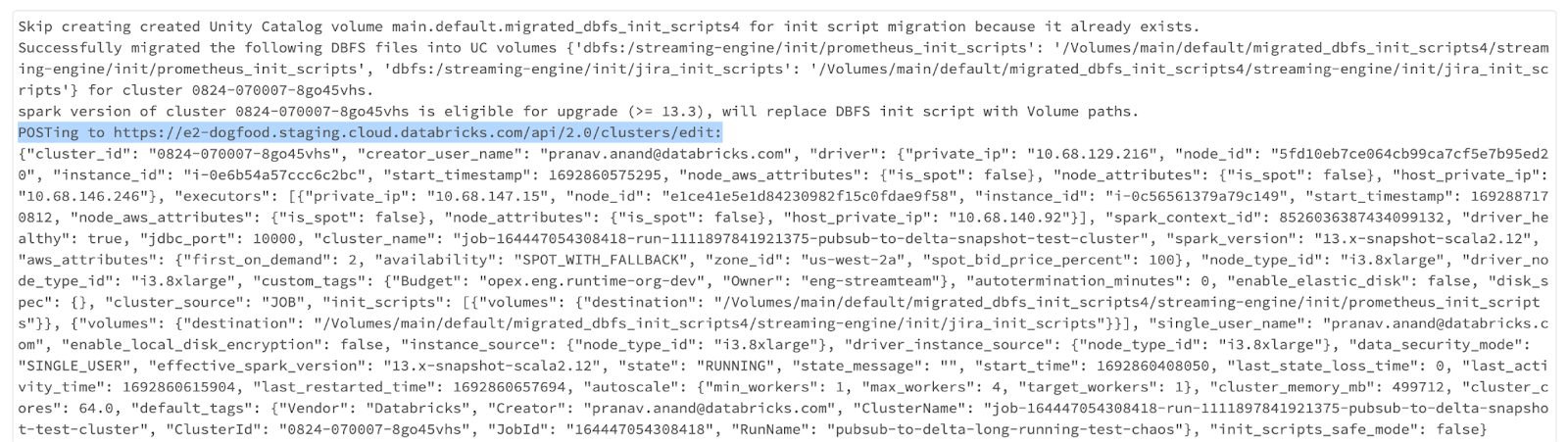

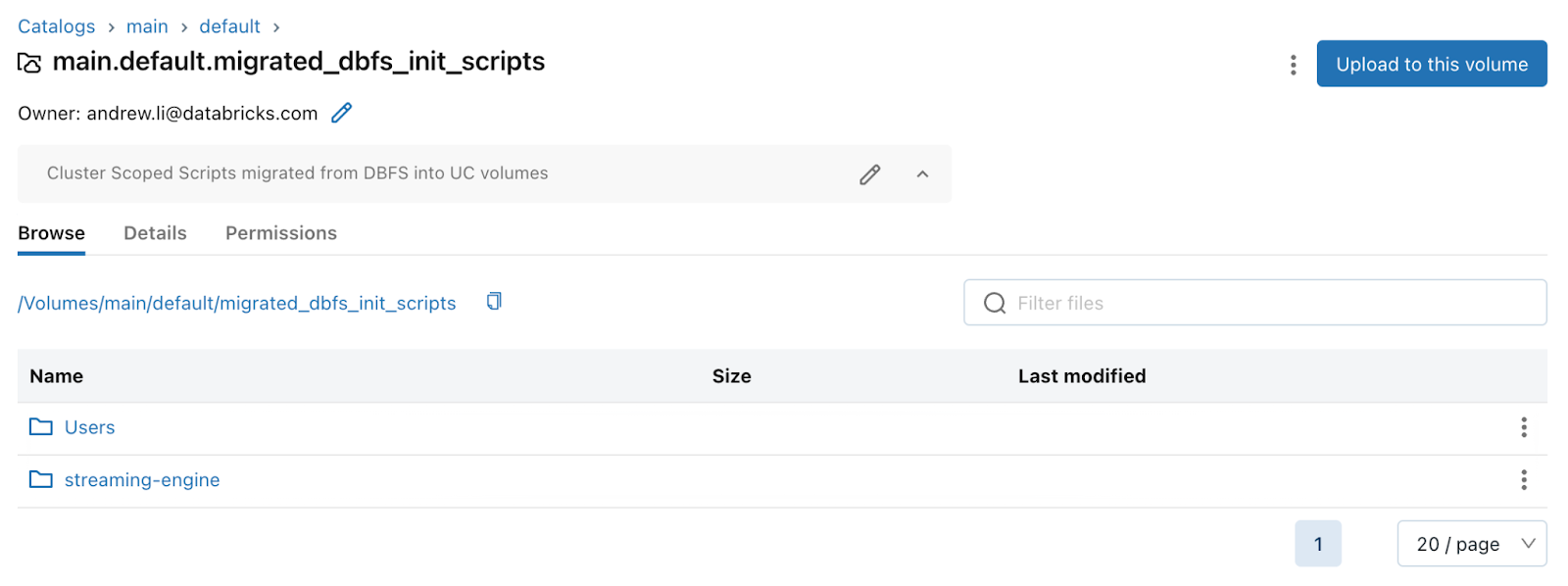

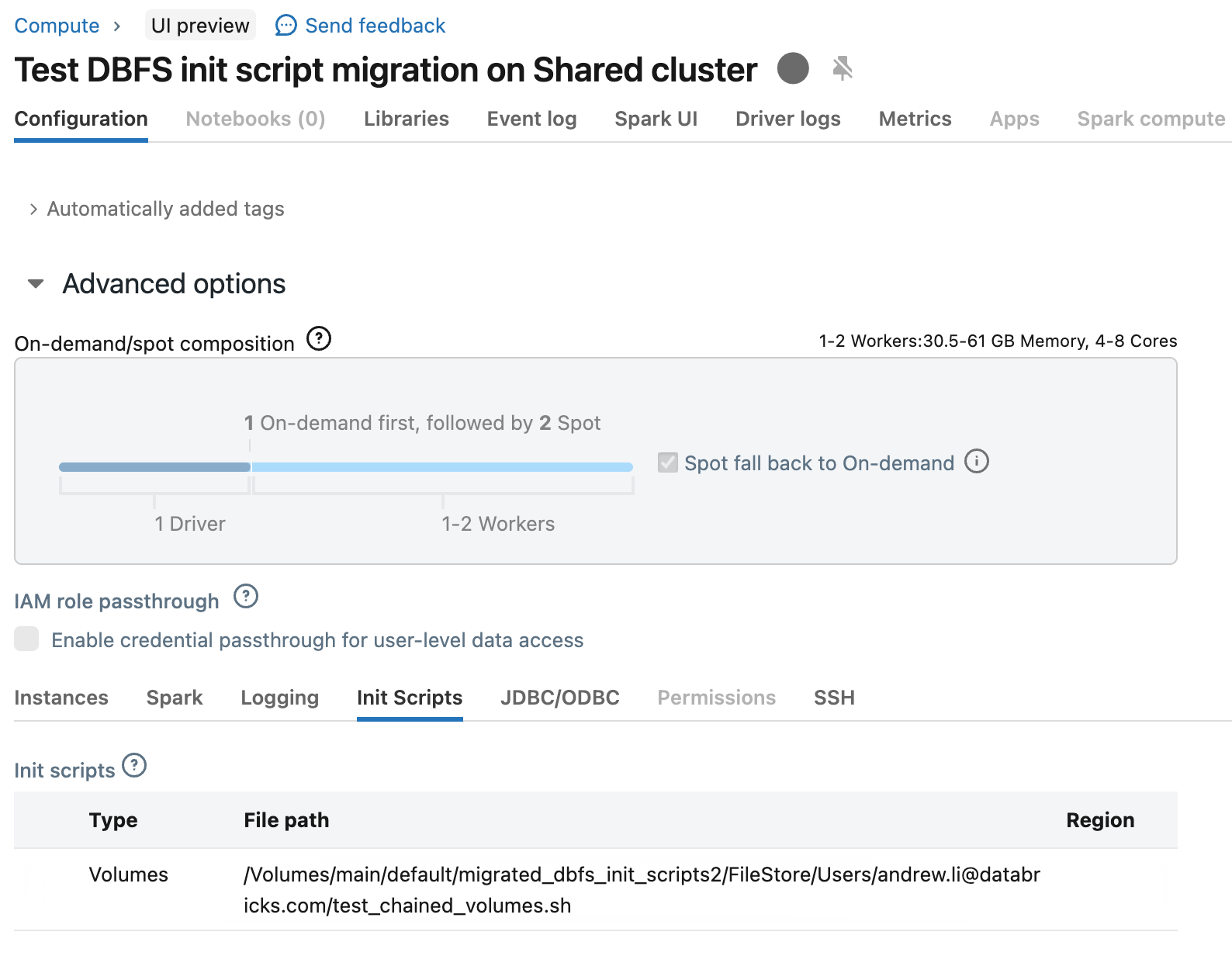

- Once the notebook finishes running, all of your cluster-scoped init scripts stored on DBFS have been migrated to cluster-scoped init scripts stored as Unity Catalog volume files. The script makes a best effort to replace the DBFS init scripts attached to clusters and jobs with the init scripts stored in Unity Catalog volumes. If any clusters or jobs fail to migrate, the errors are displayed in the notebook results.

Validate the migrated init scripts

After the migration, the clusters and jobs should show the migrated init scripts pointing to the new volume path.
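One way to spot anything that was missed is to scan cluster specs for init scripts that still point at DBFS. The sketch below is a hypothetical helper, not part of the migration notebook. It assumes its input is a list of cluster dictionaries shaped like the `clusters` array returned by the Clusters API list endpoint, each with a `cluster_id` and an optional `init_scripts` list.

```python
def remaining_dbfs_init_scripts(clusters):
    """Map cluster_id -> DBFS init script destinations still attached.

    An empty result means no cluster in the input still references
    a DBFS init script.
    """
    leftovers = {}
    for cluster in clusters:
        dbfs_paths = [
            script["dbfs"]["destination"]
            for script in cluster.get("init_scripts", [])
            if "dbfs" in script
        ]
        if dbfs_paths:
            leftovers[cluster["cluster_id"]] = dbfs_paths
    return leftovers
```

Any cluster ID that appears in the result still has a DBFS init script attached and is worth checking against the errors reported in the notebook results.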

Troubleshooting

The migration of init scripts on clusters or jobs can fail for several reasons. The following table lists the potential error messages and how to resolve them.

| Error message | Resolution |
| --- | --- |
| Failed to list all clusters or jobs in workspace | The current user is not a workspace admin, so the script cannot fetch the cluster and job details needed to migrate the init scripts. Make sure that you run the migration as a workspace admin. |
| Failed to create Unity Catalog volume for init script migration | The script failed to create the Unity Catalog volume necessary for migrating DBFS init scripts. Give yourself CREATE_VOLUME permission on the parent schema of the volume you selected, or select an existing volume that you own. |
| Failed to update Unity Catalog volume permission with READ_VOLUME for account users. | The script failed to update the volume so that all cluster owners can read the init scripts within it. This is most likely because the current user is not an owner of the selected volume. Select a volume that the current user owns. |
| Failed to allowlist migration UC volume in the metastore artifact allowlist. If you are not a metastore admin, please ask them to grant you MANAGE_ALLOWLIST permission on the metastore, or help add the path into the metastore artifact allowlist. | You have at least one DBFS init script attached to a Unity Catalog shared mode cluster that needs to be migrated. However, you are not the metastore admin and do not have the MANAGE_ALLOWLIST permission on the metastore, so you cannot add the new volume paths to the metastore artifact allowlist, which is required for them to be usable on Unity Catalog shared mode clusters. Either ask the metastore admin to add the volume path to the allowlist following the instructions in metastore artifact allowlist (AWS | Azure), or ask for the MANAGE_ALLOWLIST permission and do it yourself. |
| Cluster with access mode is not eligible for Unity Catalog volumes. | Only clusters with access mode “ASSIGNED (SINGLE USER)” or “SHARED (USER_ISOLATION)” can use Unity Catalog volumes as init script sources. If you are using DBFS init scripts on non-UC clusters, please follow this article to migrate the init scripts into workspace files. |
| Spark version of cluster is not eligible for Unity Catalog volume based init script (requires >= 13.3 but actual version is _). OR Cluster is using a custom Databricks runtime image which is not eligible for Unity Catalog volume based init script. | Your cluster or job is using Databricks Runtime 13.2 or below, or a custom image provided by Databricks engineers, which does not support Unity Catalog volume based init scripts. For SHARED mode clusters, the only resolution is to upgrade to Databricks Runtime 13.3 or above. For ASSIGNED mode clusters, you can also consider following this article to migrate the init scripts into workspace files if a Databricks Runtime upgrade is not feasible. |
| Failed to migrate cluster from DBFS init script into UC volume init scripts. Error message: _. | The script failed to modify the cluster with updated UC volume based init scripts, and the reason is not one of the expected ones. Possible causes include the cluster having been created by a managed service such as jobs or DLT, or the current user lacking “CAN MANAGE” permission on the cluster. The error message should give more details on how to resolve this issue. |
| Failed to migrate job from DBFS init script into UC volume init scripts. Error message: _. | The script failed to modify the job with updated UC volume based init scripts, and the reason is not one of the expected ones. A possible cause is the current user lacking “CAN MANAGE” permission on the job. The error message should give more details on how to resolve this issue. |
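The access mode and runtime version errors can be anticipated before running the migration. The sketch below checks a single cluster spec against the two eligibility rules stated in the table. It is a hypothetical pre-check, not the notebook's own logic. It assumes the spec uses the Clusters API field names `data_security_mode` (with values `SINGLE_USER` and `USER_ISOLATION` for the two eligible modes) and `spark_version` (with values such as `13.3.x-scala2.12`), and it treats any version string that does not start with `<major>.<minor>.` as a custom image.

```python
import re

# Access modes that can use Unity Catalog volumes as init script sources.
UC_ACCESS_MODES = {"SINGLE_USER", "USER_ISOLATION"}
MIN_RUNTIME = (13, 3)


def parse_runtime(spark_version):
    """Return (major, minor) for strings like '13.3.x-scala2.12', else None."""
    match = re.match(r"^(\d+)\.(\d+)\.", spark_version)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def volume_init_script_blocker(cluster_spec):
    """Return a reason string if the cluster cannot use volume based
    init scripts, or None if both eligibility rules are satisfied."""
    mode = cluster_spec.get("data_security_mode")
    if mode not in UC_ACCESS_MODES:
        return f"access mode {mode} is not eligible"
    runtime = parse_runtime(cluster_spec.get("spark_version", ""))
    if runtime is None:
        return "custom or unrecognized runtime image"
    if runtime < MIN_RUNTIME:
        return f"runtime {runtime[0]}.{runtime[1]} is below 13.3"
    return None
```

A return value of `None` only means these two rules pass. Permission and allowlist problems can still cause the other errors listed in the table.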